This product is no longer sold. We no longer update these help pages, so some information may be out of date.

Amazon: S3 via Lambda

AWS Lambda lets you run code without provisioning or managing servers. When connected to AWS S3, events from S3 buckets can trigger Lambda functions.

You can connect your AWS S3 buckets to Log Management (InsightOps) through an AWS Lambda function, so that log files added to a bucket are parsed and forwarded to Log Management (InsightOps).

To connect them, you must:

- Obtain a Log Token

- Deploy a Script to AWS Lambda

- Upload Function Code

- Configure Log Management (InsightOps)

You can also refer to the working example in the Example Code section.

Before You Begin

Ensure that you are using the Python certifi package, which provides a curated bundle of trusted root CA certificates. You can read more about certifi here: https://github.com/certifi/python-certifi
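Bundling certifi presumably lets the script verify the TLS certificate of the Log Management (InsightOps) endpoint independently of the CA store available in the Lambda runtime. The following is a minimal sketch of building such a verifying TLS context, not the code in r7insight_lambdas3.py; make_tls_context is a hypothetical name, and the sketch falls back to the system CA store if certifi cannot be imported.

```python
import ssl

try:
    import certifi  # third-party package; certifi.where() returns the path to cacert.pem
except ImportError:  # fall back to the system CA store if certifi is not bundled
    certifi = None


def make_tls_context() -> ssl.SSLContext:
    """Return a client TLS context that verifies server certificates and host names."""
    cafile = certifi.where() if certifi is not None else None
    # create_default_context() turns on certificate verification and hostname checking.
    return ssl.create_default_context(cafile=cafile)
```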

Use Cases

You can use Log Management (InsightOps) with AWS Lambda in situations such as:

- Forward AWS ELB and CloudFront logs.

- Forward OpenDNS logs.

Obtain a Log Token

Token-based input is a single TCP connection where each log line contains a token which uniquely identifies the destination log.
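To illustrate the token-based format, here is a minimal Python sketch that prefixes each log line with its token and sends the lines over one TLS-wrapped TCP connection. It is not the code in r7insight_lambdas3.py: format_token_line and send_lines are hypothetical names, and the host and port are placeholders you would need to confirm for your region.

```python
import socket
import ssl


def format_token_line(token: str, message: str) -> bytes:
    """Prefix one log message with its log token, as token-based input expects."""
    line = message.rstrip("\r\n")
    if "\n" in line or "\r" in line:
        # Every line sent must start with the token, so reject multi-line input.
        raise ValueError("message must be a single line")
    return f"{token} {line}\n".encode("utf-8")


def send_lines(host: str, port: int, token: str, messages) -> None:
    """Send messages over a single TLS-wrapped TCP connection.

    ASSUMPTION: host and port are placeholders for your region's
    token-based TCP endpoint; they are not taken from this page.
    """
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=10) as raw:
        with context.wrap_socket(raw, server_hostname=host) as conn:
            for message in messages:
                conn.sendall(format_token_line(token, message))
```

For example, format_token_line("my-token", "GET /index.html 200\n") returns b"my-token GET /index.html 200\n": the token comes first, so the service can route that single line to the right log.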

To obtain a log token:

- Log in to your Log Management (InsightOps) account.

- Follow the instructions here to obtain a log token: TCP Log Tokens.

Alternatively, you can reuse an existing token to aggregate these logs into a log you already have.

Deploy a Script to AWS Lambda

Next, create a function in AWS Lambda that runs the forwarding script whenever an event from your S3 bucket triggers it.

You must complete the following actions:

Configure Functions

Create a new function in AWS Lambda following the directions here: https://docs.aws.amazon.com/lambda/latest/dg/getting-started-create-function.html

To configure a new function:

- Log in to your AWS Console.

- From the Services dropdown, select Lambda from the Compute section.

- Click the orange Create function button on the right.

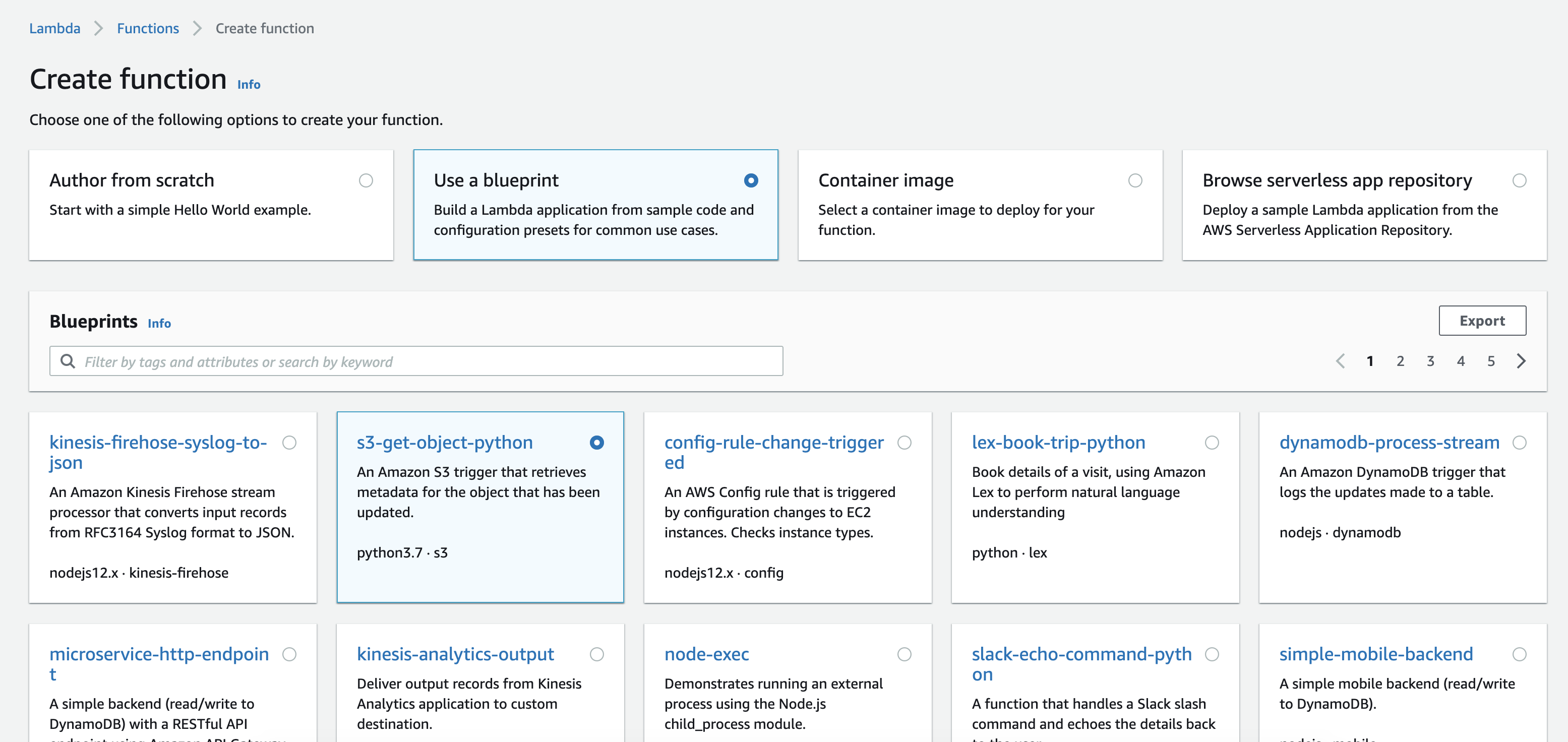

- Select Use a blueprint.

- In the Blueprints section, search for and select the s3-get-object-python blueprint.

- Click the Configure button.

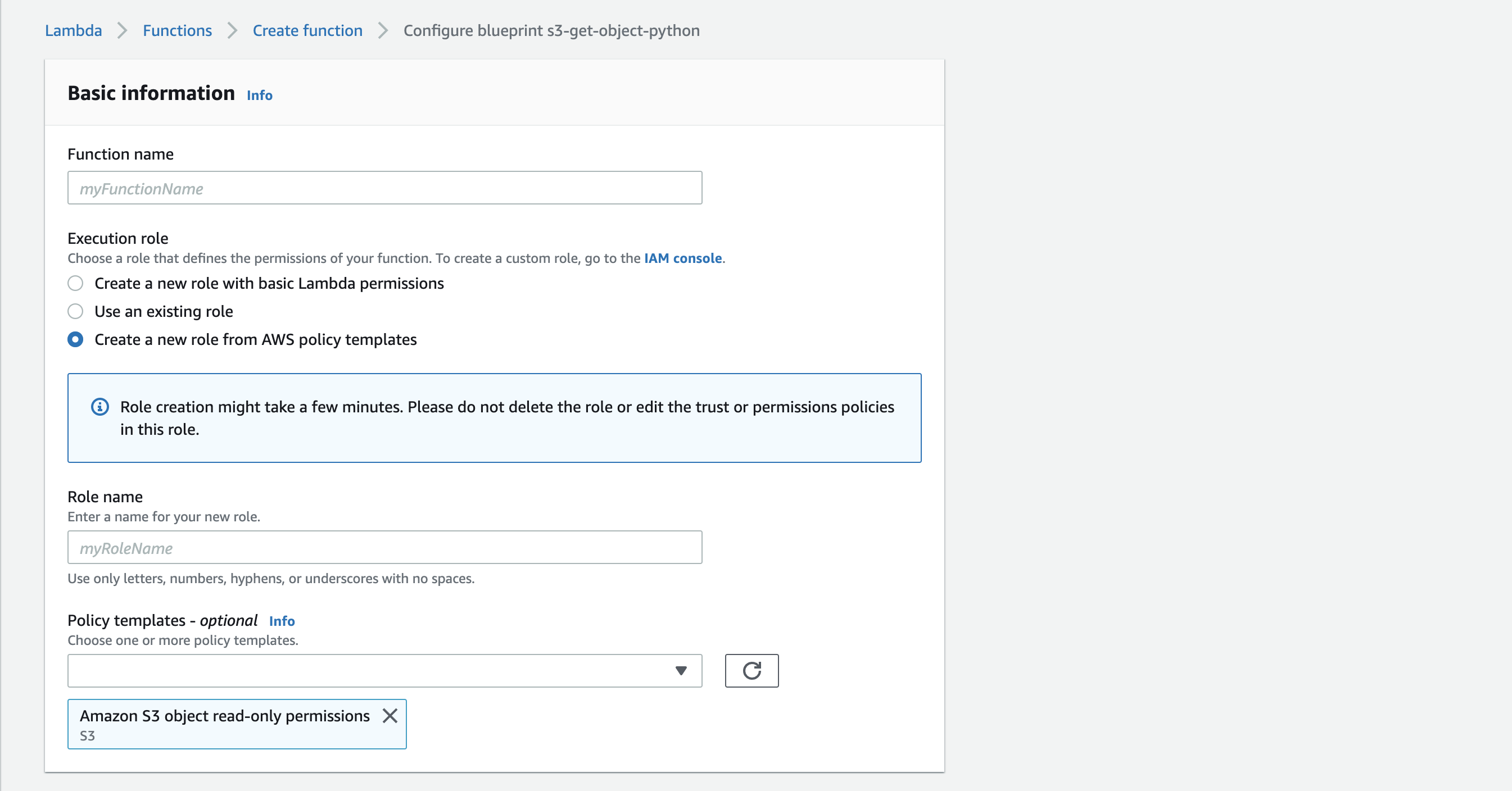

- In the Basic information section, specify a function name and create or select an execution role with S3 object read permissions.

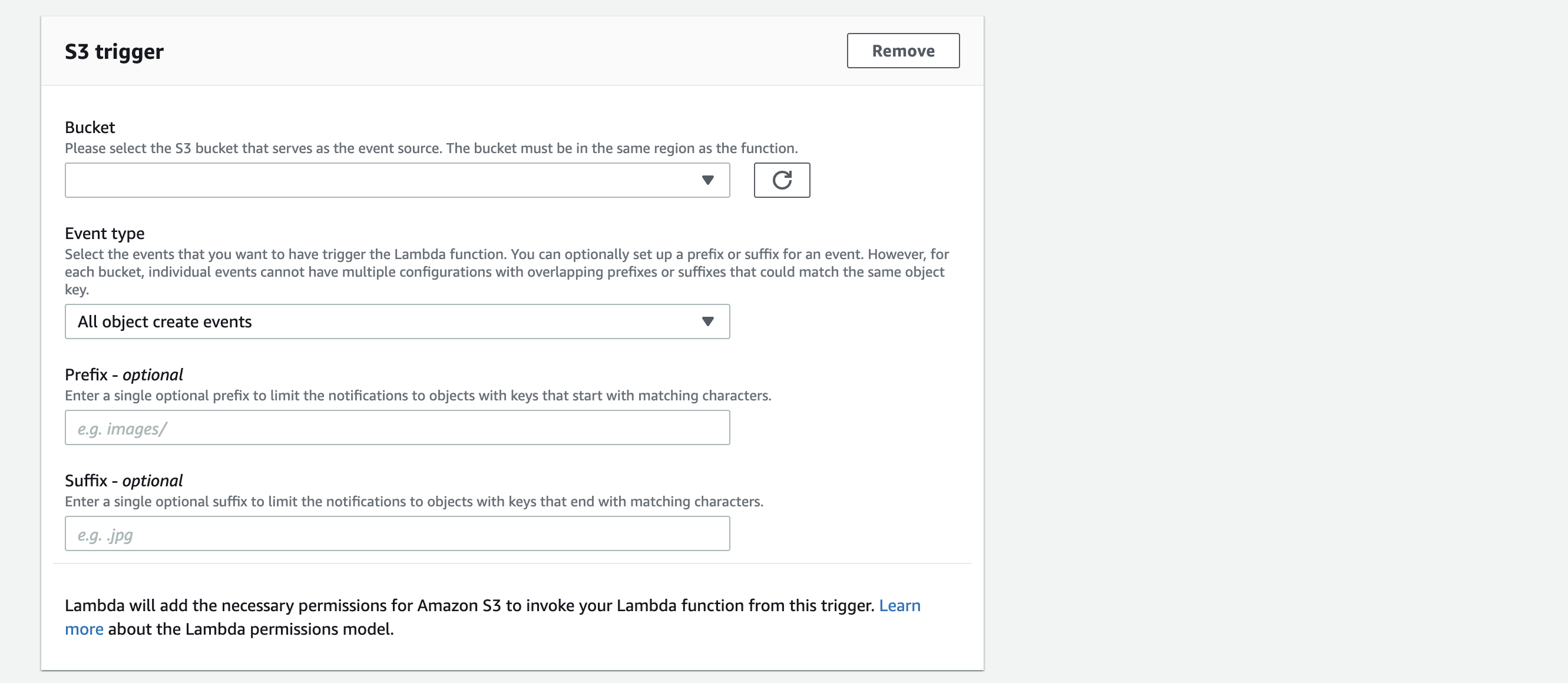

- In the S3 trigger section, select the S3 bucket that stores the log data you want to send.

- For Event type, select All object create events.

- Leave the default Lambda function code for now.

- Click the Create function button.
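When the trigger fires, Lambda passes the function an S3 event notification. As a minimal sketch of what the handler receives, the code below pulls the bucket name and object key out of each record, similar to what the s3-get-object-python blueprint does; it is not the blueprint's or Rapid7's code, and objects_from_s3_event and the sample bucket name are hypothetical.

```python
from __future__ import annotations

import urllib.parse


def objects_from_s3_event(event: dict) -> list[tuple[str, str]]:
    """Return (bucket, key) for every record in an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        s3 = record["s3"]
        bucket = s3["bucket"]["name"]
        # Keys arrive URL-encoded (a space becomes '+'), so decode them first.
        key = urllib.parse.unquote_plus(s3["object"]["key"], encoding="utf-8")
        objects.append((bucket, key))
    return objects


# Trimmed-down event containing only the fields used above.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "my-log-bucket"},
                "object": {"key": "logs/access+log.txt"}}}
    ]
}
print(objects_from_s3_event(sample_event))  # prints [('my-log-bucket', 'logs/access log.txt')]
```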

Upload the Function Code

- On your machine, create a .ZIP file containing r7insight_lambdas3.py and the certifi folder, which you can download here: https://github.com/rapid7/r7insight_lambdaS3

- Make sure the file and the certifi folder are in the root of the ZIP archive.

- In the Code Source section, click the Upload from dropdown button and select .zip file.

- Select the ZIP archive you created in the previous step, and click Save.

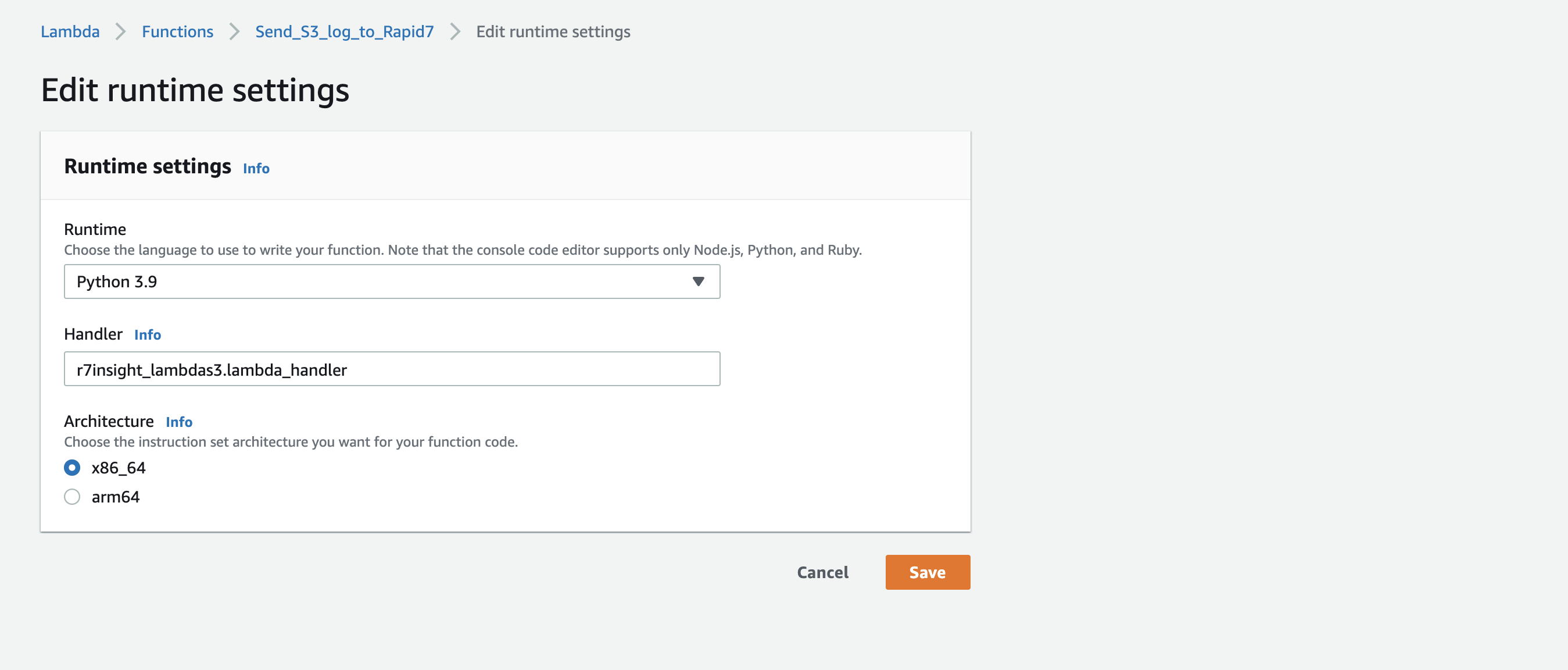

- In the Edit Runtime Settings section, select Python 3.9 as the runtime language.

- In the Handler field, set the handler name to r7insight_lambdas3.lambda_handler (the lambda_handler function in the r7insight_lambdas3.py file).

- Click Save.

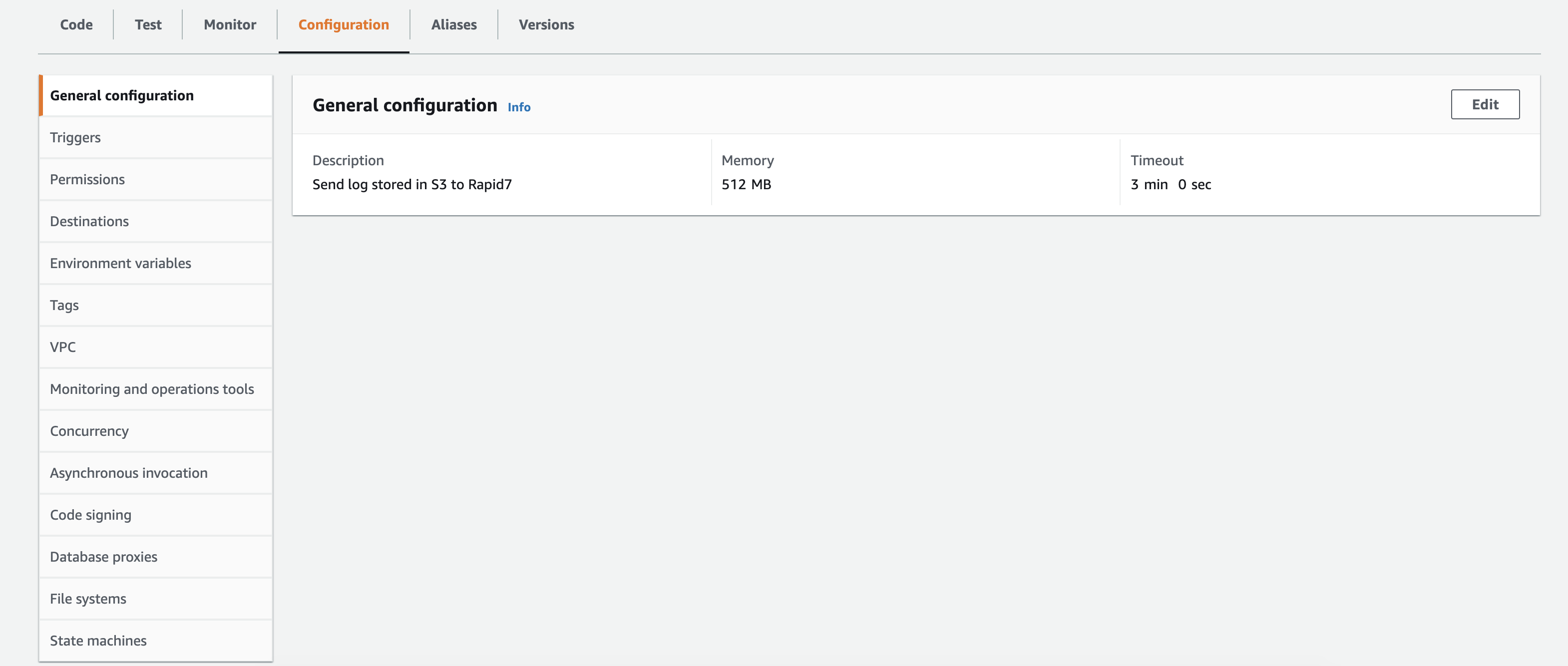

- Click the Configuration tab. In the General configuration section, set the memory and timeout values needed to parse and send every log file added to the S3 bucket. The right values depend on the size and format of the log files; if the Lambda function fails, you may need to increase them.

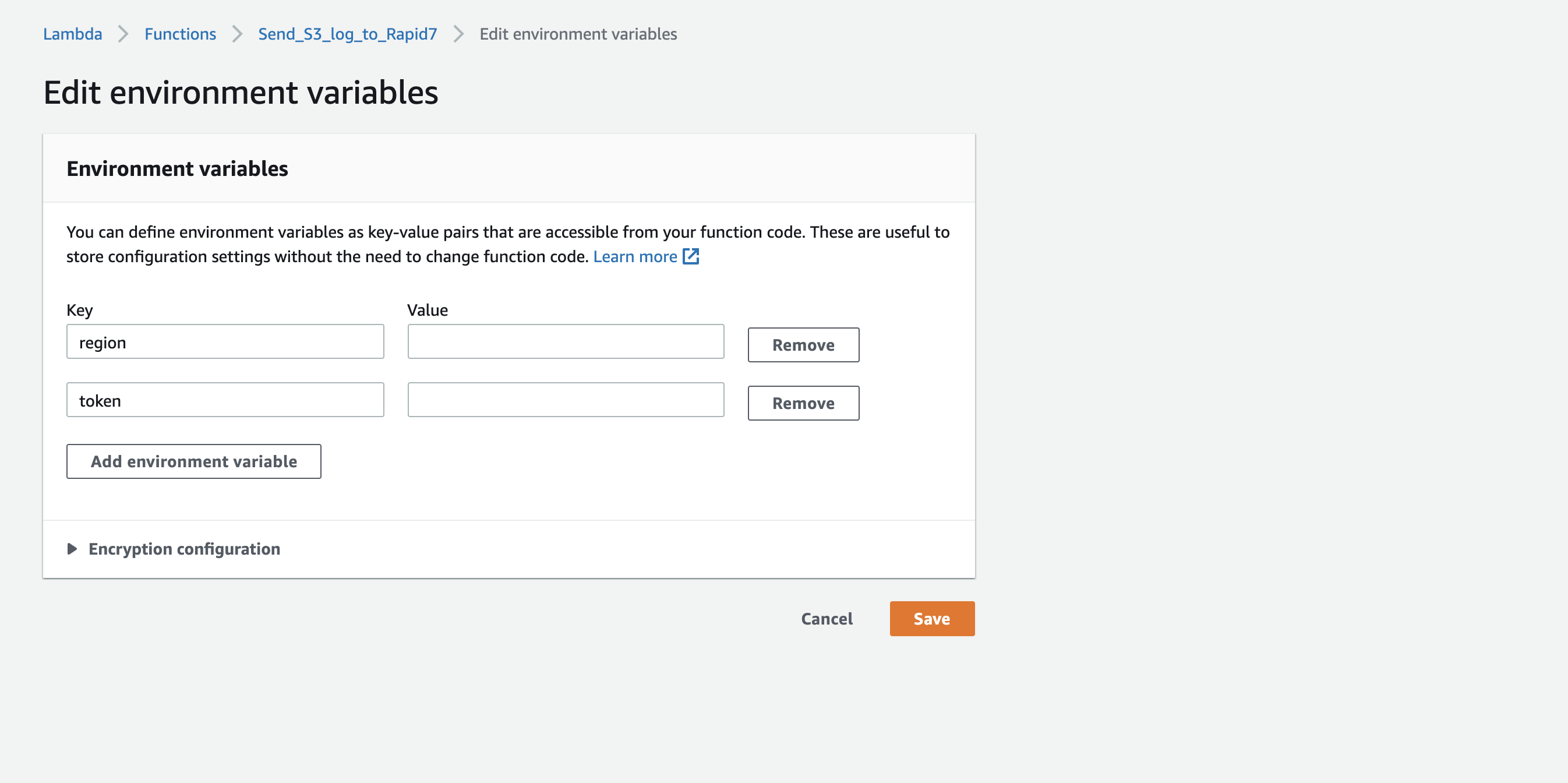

- In the Environment variables section, add the following key-value pairs:

  - region - your Log Management (InsightOps) region (for example, eu or us)

  - token - the log token UUID you obtained earlier

- You can test the function by adding a test file to the S3 bucket. The log events should be visible in Log Management (InsightOps) within a few minutes. If they are not, check the Lambda function's logs in the Monitor tab.

The Lambda function runs each time a new log file is added to the S3 bucket.
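A misspelled region or a pasted token with stray characters is an easy way for the function to fail silently. The following is a minimal sketch of reading and sanity-checking the region and token environment variables before any data is sent; read_settings is a hypothetical helper, not part of r7insight_lambdas3.py, and the ingestion host name it builds from the region is an assumption you should check against the script.

```python
import os
import uuid


def read_settings(environ=None) -> tuple:
    """Read and validate the 'region' and 'token' environment variables."""
    env = os.environ if environ is None else environ
    region = env.get("region", "").strip().lower()
    token = env.get("token", "").strip()
    if not region:
        raise ValueError("set the 'region' environment variable, for example eu or us")
    try:
        uuid.UUID(token)  # the log token is a UUID; this raises ValueError otherwise
    except ValueError:
        raise ValueError("the 'token' environment variable is not a valid UUID") from None
    # ASSUMPTION: hypothetical endpoint pattern; confirm it against r7insight_lambdas3.py.
    host = f"{region}.data.logs.insight.rapid7.com"
    return region, token, host
```

Passing a dictionary instead of os.environ makes the helper easy to check locally, for example read_settings({"region": "eu", "token": "<your token>"}).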

Example Code

The following is an example of a working deployment package layout. AWS currently names the root directory after your Lambda function automatically.

```
(root)
├── certifi/
│   ├──
│   ├── __init__.py
│   ├── __main__.py
│   ├── cacert.pem
│   ├── core.py
│   ├── old_root.pem
│   └── weak.pem
└── r7insight_lambdas3.py
```
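To build an archive with this layout, you could use a short script like the following minimal sketch, which uses only Python's standard zipfile module. build_package and has_root_layout are hypothetical names, and the source directory is assumed to contain r7insight_lambdas3.py and the certifi folder side by side. has_root_layout also flags an archive whose files are nested under a subfolder, as happens with a ZIP downloaded directly from GitHub.

```python
import pathlib
import zipfile

HANDLER_FILE = "r7insight_lambdas3.py"


def build_package(src_dir, zip_target) -> None:
    """Zip the handler and the certifi/ folder so both sit at the archive root."""
    src = pathlib.Path(src_dir)
    with zipfile.ZipFile(zip_target, "w", zipfile.ZIP_DEFLATED) as zf:
        zf.write(src / HANDLER_FILE, arcname=HANDLER_FILE)
        # Store certifi files as certifi/<name>, relative to src_dir, not as absolute paths.
        for path in sorted((src / "certifi").rglob("*")):
            if path.is_file():
                zf.write(path, arcname=path.relative_to(src).as_posix())


def has_root_layout(zip_target) -> bool:
    """Return True if the handler and certifi/ are at the root, not inside a subfolder."""
    with zipfile.ZipFile(zip_target) as zf:
        names = zf.namelist()
    return HANDLER_FILE in names and any(n.startswith("certifi/") for n in names)
```

For example, build_package("r7insight_lambdaS3", "function.zip") followed by has_root_layout("function.zip") should return True before you upload function.zip (both paths are illustrative).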

Note: A ZIP file downloaded from GitHub puts all the files under a subdirectory, so uploading the downloaded ZIP directly to AWS will not work.

Configure Data in Log Management (InsightOps)

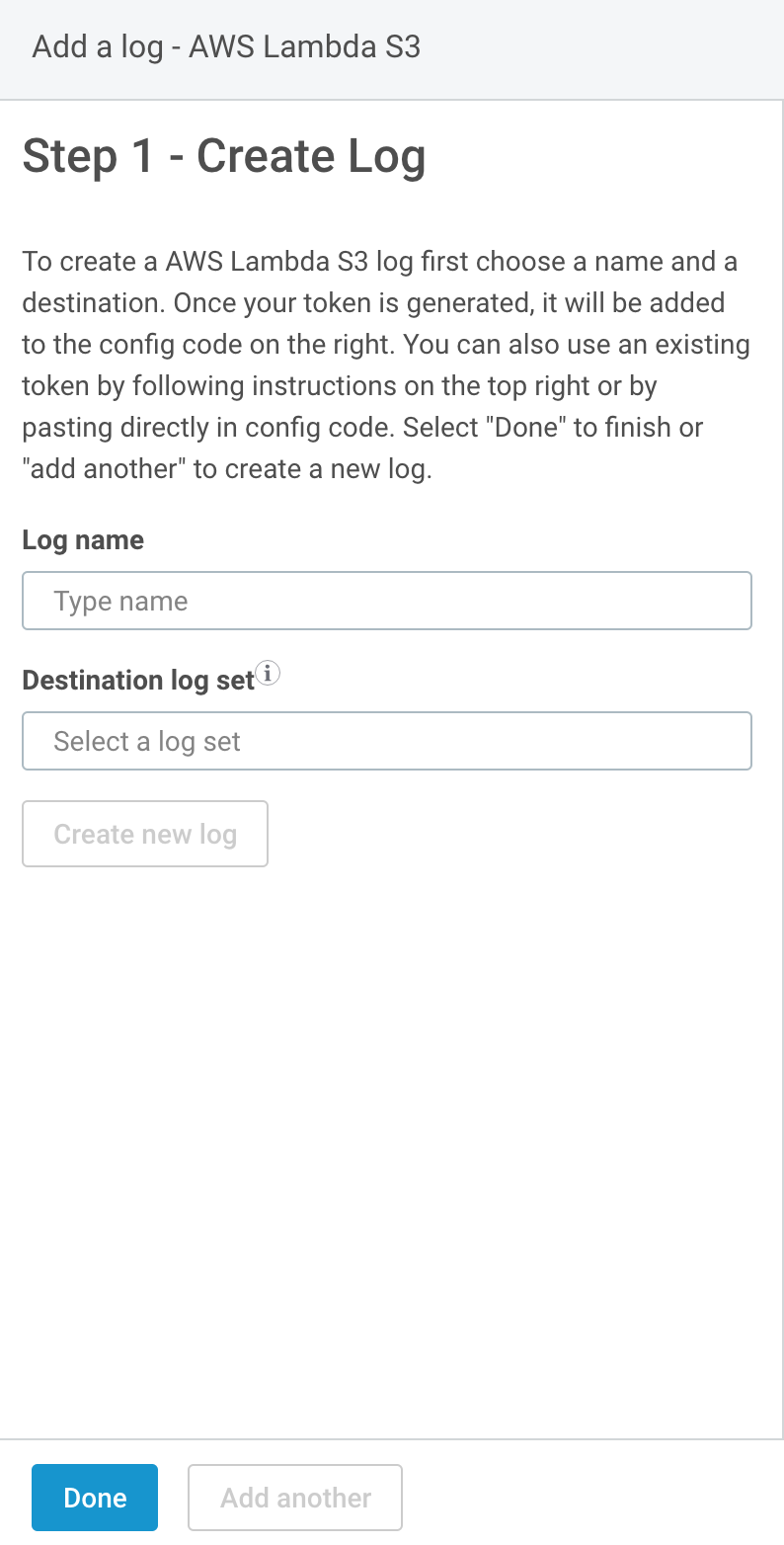

To finish the configuration for AWS Lambda via your S3 bucket:

- Log in to Log Management (InsightOps).

- Select the Data Collection page from the left-hand menu.

- Select the AWS Lambda S3 icon from the System Data section.

- In the Log Name field, enter the name you want to see for this data in Log Search.

- Select an existing log set to add this log to, or type in a name to create a new log set.

- Click the Done button.

Once log files start arriving in the AWS S3 bucket, the Lambda function fires and the logs appear on the Log Search page in JSON format, approximately 30 seconds later.