Attack Restrictions

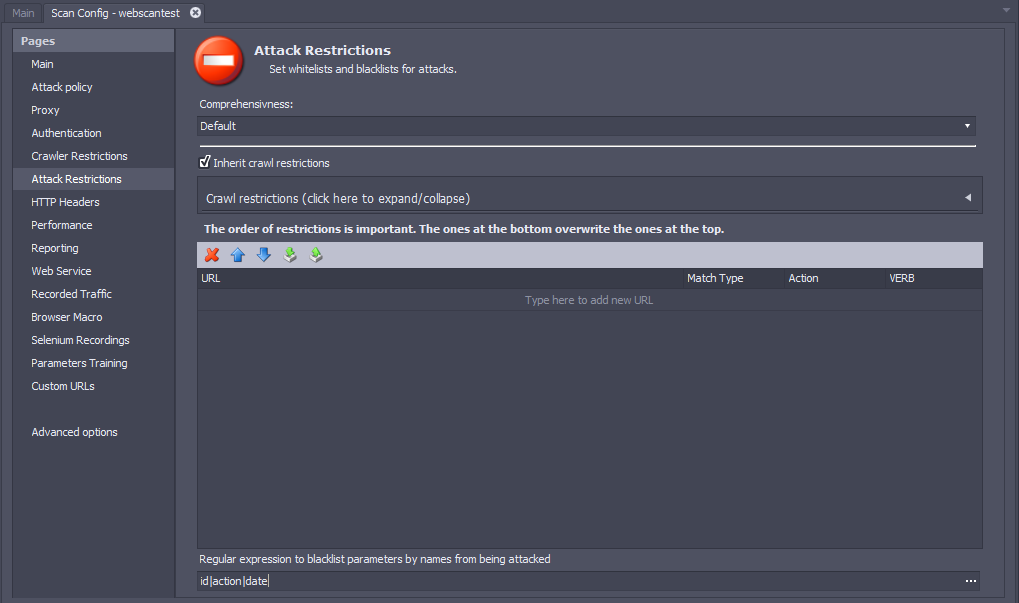

Crawling your entire web application helps you understand your exposure. However, there may be areas of your application that you don’t want to attack. You can set an allowlist or a denylist to control which portions of your application can be attacked.
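The difference between the two list types can be sketched in a few lines of Python. This is a hypothetical model, not the product's implementation: the function name `is_attackable` is invented for illustration, and treating each entry as a regular expression searched anywhere in the URL is an assumption. Check the crawler restrictions documentation for the matching rules the product actually applies.

```python
import re
from typing import Iterable


def is_attackable(url: str, mode: str, patterns: Iterable[str]) -> bool:
    """Hypothetical check: may this URL be attacked under a single restriction list?

    ASSUMPTION: each pattern is a regular expression searched anywhere in the URL.
    - "allowlist": only URLs that match at least one pattern are attacked.
    - "denylist":  URLs that match any pattern are skipped; all others are attacked.
    """
    matched = any(re.search(p, url) for p in patterns)
    if mode == "allowlist":
        return matched
    if mode == "denylist":
        return not matched
    raise ValueError(f"unknown mode: {mode!r}")


if __name__ == "__main__":
    urls = [
        "https://shop.example.com/cart",
        "https://shop.example.com/checkout",
        "https://shop.example.com/admin/users",
        "https://shop.example.com/logout",
    ]
    # Denylist: attack everything except admin pages and the logout URL.
    print([u for u in urls if is_attackable(u, "denylist", [r"/admin/", r"/logout$"])])
    # Allowlist: attack only the checkout flow.
    print([u for u in urls if is_attackable(u, "allowlist", [r"/checkout"])])
```

Under this model, an allowlist fails closed (a newly discovered, unlisted page is not attacked), while a denylist fails open (a new page is attacked unless some pattern excludes it).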

Note

Since the functionality on this screen is similar to the Crawler Restrictions screen, we recommend that you review the crawler restrictions documentation before creating attack restrictions.

Inherit Crawl Restrictions

Select the Inherit crawl restrictions option to apply the same restrictions to both crawling and attacking URLs. You can then narrow the attack scope further, so that vulnerability tests run on only a subset of the crawled pages, by adding restrictions to the Attack Restrictions table.
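One way to picture how inheritance and the Attack Restrictions table combine is as an intersection: a URL is attacked only if it passes the inherited crawl restrictions and also passes the attack restrictions. The Python sketch below models that reading. The `Restrictions` class, its allow/deny regex fields, and `attack_scope` are hypothetical names, and the AND combination is an assumption drawn from the description above rather than documented product logic.

```python
import re
from dataclasses import dataclass, field


@dataclass
class Restrictions:
    """Hypothetical restriction set built from regular expressions.

    allow: if non-empty, a URL must match at least one of these patterns.
    deny:  a URL matching any of these patterns is excluded.
    """
    allow: list[str] = field(default_factory=list)
    deny: list[str] = field(default_factory=list)

    def permits(self, url: str) -> bool:
        allowed = not self.allow or any(re.search(p, url) for p in self.allow)
        denied = any(re.search(p, url) for p in self.deny)
        return allowed and not denied


def attack_scope(urls: list[str], crawl: Restrictions, attack: Restrictions,
                 inherit_crawl: bool) -> list[str]:
    """Return the URLs that would receive vulnerability tests under this model.

    ASSUMPTION: with inheritance on, a URL must pass the crawl restrictions
    AND the attack restrictions (a logical intersection).
    """
    scope = []
    for url in urls:
        if inherit_crawl and not crawl.permits(url):
            continue  # excluded by the inherited crawl restrictions
        if attack.permits(url):
            scope.append(url)
    return scope


if __name__ == "__main__":
    candidates = [
        "https://app.example.com/app/orders",
        "https://app.example.com/app/reports/export",
        "https://app.example.com/blog/post-1",
    ]
    crawl = Restrictions(allow=[r"/app/"])       # crawl only the /app/ area
    attack = Restrictions(deny=[r"/reports/"])   # extra, attack-only exclusion
    print(attack_scope(candidates, crawl, attack, inherit_crawl=True))
    # → ['https://app.example.com/app/orders']
```

The `/reports/` entry shows the narrowing described above: the export page passes the crawl restrictions but is still left out of the attack. With `inherit_crawl=False` in this model, the blog URL would be attacked as well, because only the attack-only deny rule is checked.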

Regular Expression to Blacklist Parameters

The “Regular expression to blacklist parameters by name from being attacked” field accepts a regular expression; any parameter whose name matches it is excluded from attack. The benefit of this field is that the exclusion applies to every URL in the application rather than to individual URLs.

For example, the expression id|action|date excludes the parameters id, action, and date from being attacked on any URL included in the scan.
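The text does not say whether the expression must match the whole parameter name or only part of it, and the difference matters for an unanchored pattern such as id|action|date. The Python sketch below compares both readings. The function names and sample parameters are hypothetical, and the sketch makes no claim about how the product actually evaluates the field.

```python
import re
from typing import Callable

PARAM_PATTERN = r"id|action|date"  # the example expression from the text


def excluded_full_match(name: str, pattern: str = PARAM_PATTERN) -> bool:
    """Reading 1: exclude a parameter only if its whole name matches."""
    return re.fullmatch(pattern, name) is not None


def excluded_search(name: str, pattern: str = PARAM_PATTERN) -> bool:
    """Reading 2: exclude a parameter if the pattern matches anywhere in its name."""
    return re.search(pattern, name) is not None


def attackable_params(params: dict[str, str],
                      excluded: Callable[[str], bool]) -> dict[str, str]:
    """Keep only parameters not excluded by name; the URL is never consulted,
    mirroring a setting that applies to every URL in the scan."""
    return {name: value for name, value in params.items() if not excluded(name)}


if __name__ == "__main__":
    query = {"id": "42", "userid": "7", "action": "view", "q": "shoes", "update": "1"}
    print(attackable_params(query, excluded_full_match))
    # → {'userid': '7', 'q': 'shoes', 'update': '1'}
    print(attackable_params(query, excluded_search))
    # → {'q': 'shoes'}
```

Under substring matching, userid and update are excluded too, because they contain id and date. If only the exact names should be excluded, an anchored expression such as ^(id|action|date)$ gives the same result under either reading.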