SDK Guide

We have the following Python SDK images that form the basis of plugins. Each offers a slightly different development environment for different circumstances. When in doubt, use the Bullseye slim image, rapid7/insightconnect-python-3-slim-plugin.

| Image | Python | OS | Notes |
|---|---|---|---|
| rapid7/insightconnect-python-3-slim-plugin | 3.9.18 | Debian 11 (Bullseye) | Bullseye image (~180MB). Package manager is apt-get. |
| rapid7/insightconnect-python-3-plugin | 3.9.18 | Alpine 3.18.14 | Alpine image (~380MB). Package manager is apk. |

When choosing a base image, consider any additional package dependencies or OS-specific behavior your plugin needs. For example, the minimal images do not include gcc and other build tools that some Python dependencies need to compile during installation. In that case, add those packages in the plugin's Dockerfile:

```
$ cat jira/Dockerfile
FROM rapid7/insightconnect-python-3-plugin:5.3

RUN apk add --no-cache gcc libc-dev libffi-dev openssl-dev
```

Note that all images have pip for installing Python modules via requirements.txt in your plugin's directory.

In your plugin’s Dockerfile, you can change the FROM line to choose the right base image for you from the options in the table above.

```
$ head -n 1 Dockerfile
FROM rapid7/insightconnect-python-3-slim-plugin:5.3
```

We use major version tags to indicate backward-incompatible changes to our base images. Unless you always want your plugin to consume the latest base image, specify a version tag in your FROM line. For example, `FROM rapid7/insightconnect-python-3-slim-plugin:5.3` pins the plugin to tag 5.3, which belongs to major version 5 of this image. When developing a plugin, you can list the tags of locally available images with `docker images`. We recommend explicitly specifying the version tag that you tested and verified your plugin with.

SDK Architecture

The plugin architecture defines the way a plugin is organized:

- Each component is organized into its own directory, with code and schema split into separate files.
- Dependencies go into the Dockerfile and requirements.txt.
- Code reused across the plugin is placed in the util directory.

```
$ tree
.
├── Dockerfile
├── Makefile
├── acmecorp-example-1.0.0.tar.gz
├── bin
│   └── icon_example
├── run.sh
├── icon.png
├── icon_example
│   ├── __init__.py
│   ├── actions
│   │   ├── __init__.py
│   │   └── say_goodbye
│   │       ├── __init__.py
│   │       ├── action.py
│   │       └── schema.py
│   ├── connection
│   │   ├── __init__.py
│   │   ├── connection.py
│   │   └── schema.py
│   ├── triggers
│   │   ├── __init__.py
│   │   └── emit_greeting
│   │       ├── __init__.py
│   │       ├── schema.py
│   │       └── trigger.py
│   └── util
│       └── __init__.py
├── plugin.spec.yaml
├── requirements.txt
├── setup.py
└── tests
    └── say_goodbye.json

9 directories, 22 files
```

Plugin Generation

As described in the Plugin Spec document, `insight-plugin create <path/to/plugin.spec.yaml>` generates the plugin skeleton.

If you modify your plugin's schema by editing plugin.spec.yaml, you'll need to regenerate the plugin skeleton with `insight-plugin refresh`. For more about regenerating a plugin from the modified YAML, see Regenerating Plugins.

Parameters

Actions

A dictionary argument called `params` contains the input variables defined in the plugin.spec.yaml file. The value of each input can be accessed with the variable/key name constant that is codegenerated into the respective schema file:

```python
def run(self, params):
    api_key = params.get(Input.API_KEY)  # Reflects a defined input called 'api_key'
```

As this is a dictionary, it is recommended to access values using `params.get()` because it:

- Allows an optional default value if the key is missing
- Returns `None` if the key is missing, whereas `params['var']` would raise `KeyError`

Note that the codegenerated constants are only available for the first level of any nested variables.
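Here is a minimal sketch of this pattern. It is written as a plain function so it runs outside the SDK. The `Input` class stands in for the generated schema constants, and the `options` input with its nested `timeout` key is hypothetical:

```python
# Stand-in for the codegenerated schema constants (hypothetical inputs).
class Input:
    API_KEY = "api_key"
    OPTIONS = "options"


def run(params):
    """Read action inputs from the params dictionary."""
    # .get() returns None instead of raising KeyError when an input is absent
    api_key = params.get(Input.API_KEY)
    # `or {}` also covers an input that is present but explicitly None
    options = params.get(Input.OPTIONS) or {}
    # Nested keys have no generated constants, so plain string keys are used
    timeout = options.get("timeout", 30)
    return {"has_api_key": api_key is not None, "timeout": timeout}


print(run({"api_key": "abc123", "options": {"timeout": 10}}))  # {'has_api_key': True, 'timeout': 10}
print(run({}))                                                 # {'has_api_key': False, 'timeout': 30}
```

Nested keys have no generated constants, so the sketch falls back to plain string keys for the second level.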

Triggers

Triggers are long-running processes that poll for / emit a new event and then send the event to the engine to kick off a workflow. Because of how workflows get fed data, all workflows start with a trigger. When a workflow is activated, the trigger code is executed in a Docker container. As the trigger initializes, the connection object gets created and then the trigger enters a loop where it waits for a message or polls for new data.

Unlike actions, triggers do not return anything based on their input. Instead, they perform some operation based on it and then send the result to the engine.

If triggers were configured via the spec, they will be present at `<plugin_name>/icon_<plugin_name>/triggers/<trigger_name>/trigger.py`.

Code

Trigger code should be placed in the body of the trigger run loop.

You can adjust the timer to suit the plugin's needs. By default, the generated loop emits an event every 5 seconds.

The `self.send()` method accepts a dictionary and passes it to the engine to kick off a workflow. The dictionary is serialized as a JSON object and is then available to other plugins via the UI.
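The sketch below is a rough illustration of what might eventually replace the TODO in the generated loop. It polls a source, skips events it has already emitted, and passes each new one to `send()`. It is not the SDK's trigger class. `fetch_events` is a hypothetical callable standing in for your API call, `send()` only records events here, and `max_polls` exists solely so the example terminates:

```python
import time


class PollingTrigger:
    """Sketch of a polling trigger loop; not the SDK's base class."""

    def __init__(self, fetch_events, interval=5):
        self.fetch_events = fetch_events  # hypothetical callable returning a list of dicts with an "id"
        self.interval = interval
        self.sent = []         # records what self.send() would hand to the engine
        self.seen_ids = set()  # IDs already emitted, to avoid duplicate workflow runs

    def send(self, event):
        # In a real plugin, self.send() is provided by the SDK
        self.sent.append(event)

    def run(self, params=None, max_polls=None):
        polls = 0
        # Real triggers loop forever; max_polls only makes the sketch testable
        while max_polls is None or polls < max_polls:
            for event in self.fetch_events():
                if event["id"] not in self.seen_ids:
                    self.seen_ids.add(event["id"])
                    self.send({"event": event})
            polls += 1
            time.sleep(self.interval)


batches = iter([[{"id": 1}, {"id": 2}], [{"id": 2}, {"id": 3}]])
trigger = PollingTrigger(lambda: next(batches), interval=0)
trigger.run(max_polls=2)
print([e["event"]["id"] for e in trigger.sent])  # [1, 2, 3]
```

The set of seen IDs lives in memory, so it is lost if the container restarts. Persisting it in the plugin cache (see Cache) is one way around that.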

```python
import time


def run(self, params={}):
    """Run the trigger"""
    # send a test event
    while True:
        # TODO: implement this
        self.send({})
        time.sleep(5)
```

Testing Triggers

Testing triggers using Docker requires the `--verbose` option. Otherwise, the trigger fails when it attempts to post events to an HTTP URL that isn't available.

Connections

Individual plugin authors are responsible for writing the code that manages connections. The generated code is only a placeholder that shows where to add your code.

You can access the connection variables defined in plugin.spec.yaml while in connection.py using a dictionary called params. The value of the input can be accessed by the variable/key name constant which is codegenerated into the respective schema file:

```python
def connect(self, params):
    # Store on self so actions and triggers can read it via self.connection
    self.hostname = params.get(Input.HOSTNAME)  # Reflects a defined input called 'hostname'
```

You also need the connection variables in an action or trigger's `run()` method. There, they are read from `self.connection`:

```python
def run(self, params):
    # 'var' stands for an attribute that connect() set on the connection, e.g. hostname
    value = self.connection.var
```

Note that the codegenerated constants are only available for the first level of any nested variables. For a username_password credential type, the codegenerated constant is only present for the top-level variable. For example:

```python
def connect(self, params):
    username_pass = params.get(Input.CREDENTIALS)  # A dictionary with username and password
    username, password = username_pass.get('username'), username_pass.get('password')
```
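The sketch below ties `connect()` and `run()` together. It assumes the SDK creates the connection, calls `connect()`, and then exposes the object to actions as `self.connection`. That wiring is simulated by hand here, and the class names and inputs are hypothetical:

```python
class Input:
    # Stand-in for the generated connection schema constants (hypothetical)
    HOSTNAME = "hostname"
    CREDENTIALS = "credentials"


class Connection:
    """Sketch: connect() stores what actions and triggers will need later."""

    def connect(self, params):
        self.hostname = params.get(Input.HOSTNAME)
        # Nested credential keys have no generated constants
        credentials = params.get(Input.CREDENTIALS) or {}
        self.username = credentials.get("username")
        self.password = credentials.get("password")


class ExampleAction:
    """Sketch: the SDK would set self.connection before calling run()."""

    def __init__(self, connection):
        self.connection = connection

    def run(self, params):
        return {"url": "https://%s/api" % self.connection.hostname,
                "user": self.connection.username}


conn = Connection()
conn.connect({"hostname": "jira.example.com",
              "credentials": {"username": "alice", "password": "s3cret"}})
print(ExampleAction(conn).run({}))  # {'url': 'https://jira.example.com/api', 'user': 'alice'}
```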

Logging

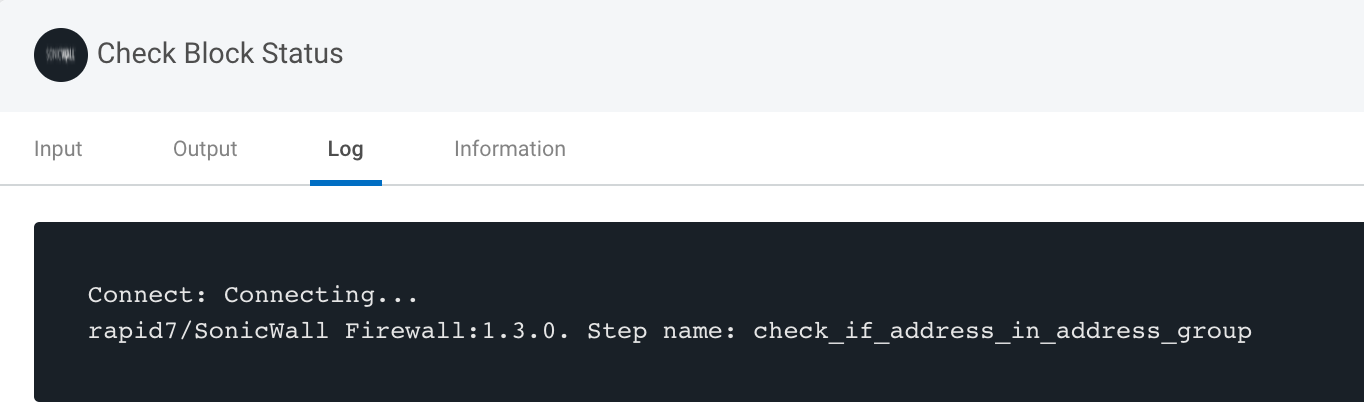

Log messages, including warnings and errors, are displayed to the user in the Log section of the Job Output.

Informational logging is done via the `self.logger` helper, a logger from Python's standard logging module. Using it is required for the log stream to reach the product UI. A few examples are below.

You can also log by raising an exception; however, this causes the plugin to fail. See Errors.

Note: You cannot use `print()` or other stdout methods to display text, because the product expects the SDK-formatted JSON payload on stdout.

```python
from insightconnect_plugin_runtime.exceptions import PluginException

self.logger.info("Connecting")

# Inside an `except Exception as e:` block
self.logger.error(e)

self.logger.error("{status} ({code}): {reason}".format(status=status_code_message, code=response.status_code, reason=reason))

self.logger.debug("Self-signed certificate would not validate")

raise PluginException(cause="Unable to reach Jira instance at: %s." % self.url,
                      assistance="Verify the Jira server at the URL configured in your plugin connection is correct.")
```

For exceptions, use PluginException or ConnectionTestException to standardize the error format.
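Here is a small sketch of the logging calls above that runs outside the SDK. In a plugin you would use `self.logger`. Here a standard library logger with an in-memory handler stands in for it. The logger name and the `fetch_status` function are hypothetical:

```python
import io
import logging

# Stand-in for self.logger: capture records in a buffer instead of the Job Output
buffer = io.StringIO()
handler = logging.StreamHandler(buffer)
handler.setFormatter(logging.Formatter("%(levelname)s: %(message)s"))
logger = logging.getLogger("example_plugin")
logger.addHandler(handler)
logger.setLevel(logging.DEBUG)
logger.propagate = False  # keep records out of the root logger for this demo


def fetch_status(logger, status_code, reason):
    logger.info("Connecting")
    if status_code != 200:
        # Arguments are formatted only if the record is actually emitted
        logger.error("%s (%d): %s", "Request failed", status_code, reason)
        return False
    return True


fetch_status(logger, 503, "Service Unavailable")
print(buffer.getvalue())
```

Passing arguments separately, rather than pre-formatting the string, defers formatting until a record is actually emitted.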

Errors

Please see the new error handling guide.

Cache

Plugins can use persistent storage for caching files when `enable_cache: true` is set in the metadata section of the plugin spec file. This is useful when a developer needs to keep state, such as when downloading files from the internet or polling an API for events.

When the cache is enabled, persistent storage is available in the following locations, depending on the product:

- Komand host (no longer supported): `/opt/komand/var/volumes/global/komand-red_canary-0.1.0`
- Automation (InsightConnect) Orchestrator host: `/opt/rapid7/orchestrator/var/cache/plugins/rapid7_microsoft_office365_email_3.0.0/`

A plugin's global cache directory is bind-mounted into the plugin's container as /var/cache. From the plugin's perspective, all cache reads and writes must occur in /var/cache.

/var/cache is shared across all of a plugin's containers, but not with the containers of other plugins. For example, plugin-A cannot access plugin-B's cache.

On GNU/Linux, the cache can be tested by manually bind-mounting the directory:

```
docker run -v /var/cache:/var/cache -i komand/myplugin:1.0.0 --debug run < tests/blah.json
```

When debugging the cache with Docker running on macOS, you can run the following to access the plugin's cache directory:

```
screen ~/Library/Containers/com.docker.docker/Data/com.docker.driver.amd64-linux/tty
cd /var/cache
```

To interact with the cache, you can take one of two routes depending on your needs.

You can interact with it directly via the filesystem. This is beneficial when working with client libraries that don't work with file descriptors, or when you need more complex logic:

```python
import os

cache_dir = '/var/cache'
cache_file = os.path.join(cache_dir, 'mycache')

if os.path.isdir(cache_dir):
    if os.path.isfile(cache_file):
        # Read the previously cached state
        with open(cache_file, 'r') as f:
            contents = f.read()
        # Do comparison against contents here
    else:
        # Create the cache file for next time
        with open(cache_file, 'w') as f:
            f.write('')
```

You can also use the provided helpers. They are a little simpler to work with but are limited to providing file descriptors:

```python
>>> f = insightconnect_plugin_runtime.helper.open_cachefile('/var/cache/mycache')
>>> insightconnect_plugin_runtime.helper.remove_cachefile('/var/cache/mycache')
True
>>> insightconnect_plugin_runtime.helper.check_cachefile('/var/cache/mycache')
False
```

More information is available in the Helper Library section at the bottom of this page.
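Here is a minimal sketch of keeping state in the cache as JSON, assuming the state fits comfortably in memory. `load_state`, `save_state`, and the `last_poll.json` file name are hypothetical. The example writes to a temporary directory instead of /var/cache so it runs anywhere:

```python
import json
import os
import tempfile


def load_state(path, default=None):
    """Return the JSON state stored at path, or default if there is none yet."""
    try:
        with open(path, "r") as f:
            return json.load(f)
    except (FileNotFoundError, json.JSONDecodeError):
        return default


def save_state(path, state):
    """Write state as JSON via a temp file, then swap it into place."""
    directory = os.path.dirname(path) or "."
    fd, tmp_path = tempfile.mkstemp(dir=directory)
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(state, f)
        # os.replace() is atomic when both paths are on the same filesystem
        os.replace(tmp_path, path)
    except BaseException:
        os.remove(tmp_path)
        raise


# In a plugin this might be /var/cache/last_poll.json (hypothetical name)
cache_dir = tempfile.mkdtemp()
state_file = os.path.join(cache_dir, "last_poll.json")
print(load_state(state_file, {"last_id": 0}))  # {'last_id': 0}
save_state(state_file, {"last_id": 42})
print(load_state(state_file))                  # {'last_id': 42}
```

The state is written to a temporary file and then swapped into place with `os.replace()`. If a container is stopped mid-write, the previous state file stays intact; at worst, a stray temporary file remains.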

Tests

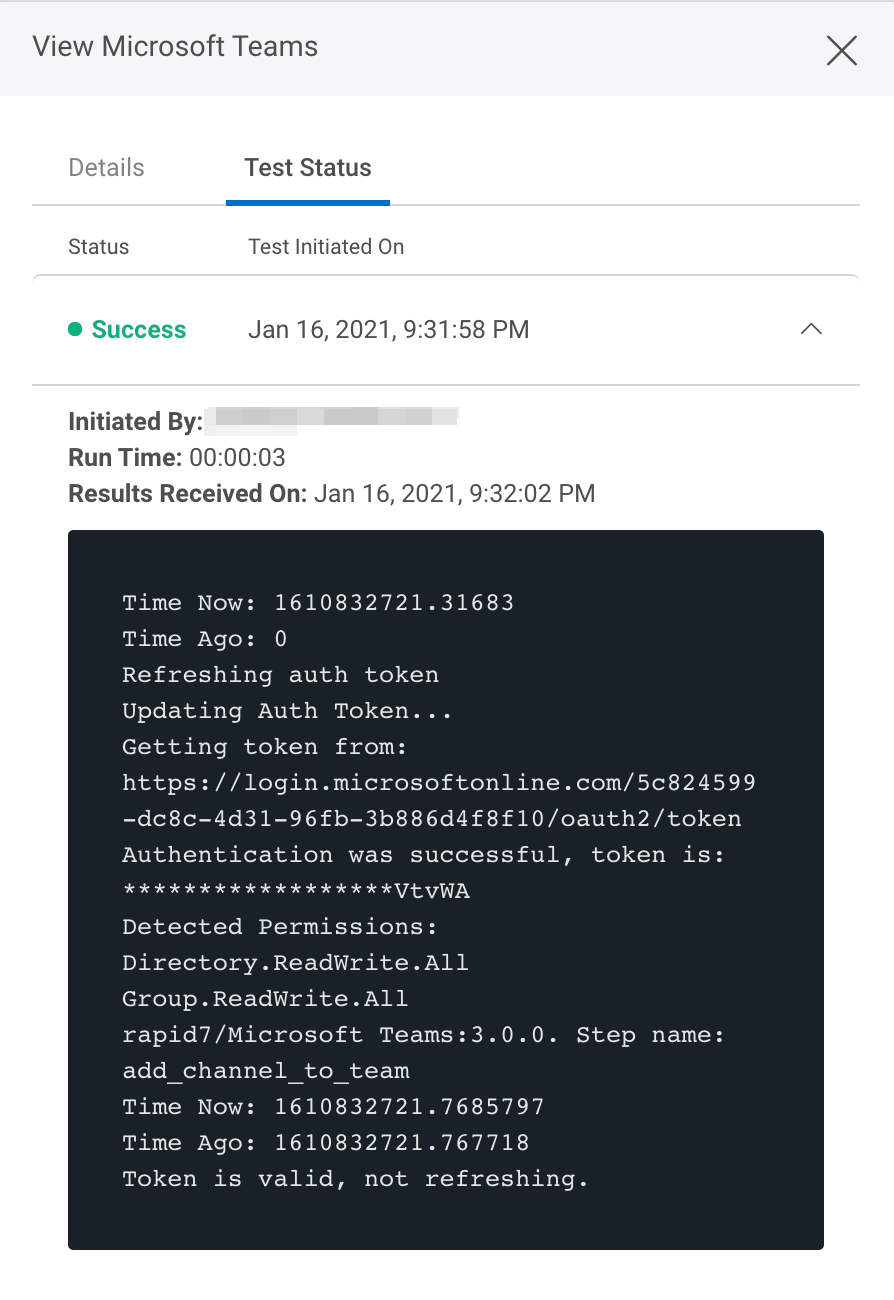

The test method resides in connection.py and tests the plugin's connection, for example by authenticating to an API to verify that the credentials were entered correctly. The test method runs before a plugin step is saved to a workflow. It should include practical tests of the functionality the plugin depends on, such as network access.

Raising an exception will cause the test method to fail.

```python
import requests
from requests.auth import HTTPBasicAuth
from insightconnect_plugin_runtime.exceptions import ConnectionTestException


def test(self):
    auth = HTTPBasicAuth(username=self.username, password=self.password)
    response = requests.get(self.url, auth=auth)

    # https://developer.atlassian.com/cloud/jira/platform/rest/v2/?utm_source=%2Fcloud%2Fjira%2Fplatform%2Frest%2F&utm_medium=302#error-responses
    if response.status_code == 200:
        return True
    elif response.status_code == 401:
        raise ConnectionTestException(preset=ConnectionTestException.Preset.USERNAME_PASSWORD)
    elif response.status_code == 404:
        raise ConnectionTestException(cause="Unable to reach Jira instance at: %s." % self.url,
                                      assistance="Verify the Jira server at the URL configured in your plugin "
                                                 "connection is correct.")
    else:
        # Raise (not just log) so that an unexpected response fails the test
        raise ConnectionTestException(cause="Unhandled error occurred: %s" % response.content)
```

Tests are executed from the command line during development, or from the web UI in production after configuring a plugin. A log of the JSON output is also viewable.
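One way to make the branching in `test()` unit-testable without network access is to move it into a pure function of the status code. This is a sketch. `ConnectionCheckError` is a hypothetical stand-in for ConnectionTestException, and the 401 message is illustrative rather than the SDK's preset text:

```python
class ConnectionCheckError(Exception):
    """Hypothetical stand-in for ConnectionTestException in this sketch."""

    def __init__(self, cause, assistance=""):
        super().__init__(cause)
        self.cause = cause
        self.assistance = assistance


def check_response(status_code, url):
    """Map an HTTP status code to pass/fail, following the branches of test()."""
    if status_code == 200:
        return True
    if status_code == 401:
        raise ConnectionCheckError("Invalid username or password.",
                                   "Verify the credentials in the plugin connection.")
    if status_code == 404:
        raise ConnectionCheckError("Unable to reach Jira instance at: %s." % url,
                                   "Verify the Jira server URL in the plugin connection.")
    raise ConnectionCheckError("Unhandled HTTP status %d from %s." % (status_code, url))


try:
    check_response(404, "https://jira.example.com")
except ConnectionCheckError as e:
    print(e.cause)  # Unable to reach Jira instance at: https://jira.example.com.
```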

For more detail and examples on testing your plugin, see Running Plugins.

Functions

Functions are top-level objects that aren't attached to any class or instance.

Use good programming practices such as breaking the program into smaller functions. This makes the plugins more readable and manageable.

Functions and methods that are shared across action and trigger files should be added to the util directory.

The compression plugin has many shared modules in the util directory.

```
$ tree ./compression/komand_compression/util
./compression/komand_compression/util
├── __init__.py
├── algorithm.py
├── compressor.py
├── connection.py
├── decompressor.py
└── utils.py
```

These modules can then be imported in action and trigger files:

```
$ grep util compression/komand_compression/actions/compress_bytes/action.py
from ...util import utils, compressor
file_bytes = utils.base64_decode(file_bytes_b64) # Decode base64 so we can manipulate the file
compressed_b64 = utils.base64_encode(compressed)
```

In older v1 plugins, we recommended creating a utils.py or helper.py file containing your functions in the actions or triggers directory, and importing it in the respective action or trigger files.
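The compression plugin's `utils.base64_decode` and `utils.base64_encode` are not shown here. The following is only a guess at what such shared helpers in util might look like, using the standard `base64` module:

```python
# Hypothetical util/utils.py; the compression plugin's actual helpers may differ.
import base64


def base64_decode(data_b64):
    """Decode a base64 string (as plugin inputs arrive) into raw bytes."""
    return base64.b64decode(data_b64)


def base64_encode(data):
    """Encode raw bytes into a base64 str suitable for a plugin output."""
    return base64.b64encode(data).decode("ascii")


raw = base64_decode("aGVsbG8=")
print(raw)                 # b'hello'
print(base64_encode(raw))  # aGVsbG8=
```

In the plugin, an action would then import these with `from ...util import utils`, as in the grep output above.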

Methods

Methods are functions attached to an object, as opposed to being freely available in the global scope. The main difference is that methods can access the local (and potentially private) state of the object they're attached to.

As with functions, use good programming practices such as breaking the program into smaller pieces. This makes the plugins more readable and manageable.

Even so, where possible you should avoid defining custom methods on generated objects and stick to util functions, which are easier to recover.

The example below avoids a series of `re.search` calls and conditionals when testing for the existence of a value. The regex in the `get_value` method extracts the value from a `\nkey: value` pair in the stdout string.

```python
import re


def get_value(self, key, stdout):
    """Extract the value from a key: value pair in stdout."""
    # Example: for key 'Domain Name' the regex is r"\nDomain Name: (.*)\n"
    regex = r"\n" + re.escape(key) + r": (.*)\n"
    r = re.search(regex, stdout)
    # Only return the value in group 1 if there was a match
    if r is not None:
        return r.group(1)
    return None

...

def run(self, params={}):
    # Initialize list with keys for matching
    keys = [
        'Domain Name',
        'Registrar WHOIS Server',
        'Updated Date',
        'Creation Date',
        'Registrar',
        'Registrar Abuse Contact Email',
        'Registrar Abuse Contact Phone',
        'Registrant Country',
    ]
    results = {}
    # `stdout` is the command output; the code that produces it is omitted here
    for key in keys:
        # Iterate over keys and store the extracted values into results
        results[key] = self.get_value(key, stdout)
    return results
```

Once the method is defined, you can call it from other methods in the same class through `self`, e.g. `self.get_value(...)`.
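The regex in `get_value` only matches a key that is both preceded and followed by a newline, so a key on the first or last line of the output is missed. The variant sketch below is not from the original plugin, and `extract_fields` is a hypothetical name. It swaps in `re.MULTILINE` anchors so that `^` and `$` match at every line boundary, and it tolerates indentation:

```python
import re


def extract_fields(stdout, keys):
    """Return {key: value} for each "key: value" line; missing keys map to None."""
    results = {}
    for key in keys:
        # [ \t]* (not \s*) keeps the match from spilling onto the next line
        pattern = r"^[ \t]*" + re.escape(key) + r":[ \t]*(.*?)[ \t]*$"
        match = re.search(pattern, stdout, re.MULTILINE)
        results[key] = match.group(1) if match else None
    return results


sample = "Domain Name: GOOGLE.COM\n   Registrar: MarkMonitor Inc.\nRegistrant Country: US"
print(extract_fields(sample, ["Domain Name", "Registrar", "Registrar WHOIS Server", "Registrant Country"]))
# {'Domain Name': 'GOOGLE.COM', 'Registrar': 'MarkMonitor Inc.', 'Registrar WHOIS Server': None, 'Registrant Country': 'US'}
```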

Meta

The Meta object contains metadata from the workflow that the plugin is being called from. It’s passed by the product to the plugin SDK and then reaches the plugin as an object called meta.

Available Parameters:

| Parameter | Description |
|---|---|
| shortOrgId | Short version of the Organization ID |
| orgProductToken | Organization Product Token |
| uiHostUrl | Job URL for triggers |
| jobId | Job UUID |
| stepId | Step UUID |
| versionId | Workflow Version UUID |
| nextStepId | Next Step UUID |
| nextEdgeId | Next Edge UUID |
| triggerId | Trigger UUID |
| jobExecutionContextId | Job Execution Context UUID |
| time | Time the action or trigger was executed |
| connectionTestId | Connection Test ID |
| connectionTestTimeout | Connection Test Timeout |
| workflowId | Workflow ID |

These parameters are accessible through the meta object set on the connection object. They are populated when a plugin action or trigger runs, or from a JSON test file.

The example code below retrieves the value of shortOrgId from the workflow details in the connection's meta object:

```python
self.connection.meta.workflow.shortOrgId
```

Note that data within the meta object may be set to None (null in JSON), because Komand and Automation (InsightConnect) workflow metadata do not map 1 to 1 in what's returned. Test for the presence of a value before using it in your code.

One good use for this data is with the cache. When a plugin is used in more than one workflow, include the workflowId property in the cache file name to keep a separate cache for each workflow.
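Here is a minimal sketch of that idea. `workflow_cache_path` is a hypothetical helper. Reading the ID from `self.connection.meta.workflow.workflowId` is an assumption based on the shortOrgId example above. Because meta values may be None, the sketch falls back to a shared file name:

```python
import os
import re


def workflow_cache_path(base_name, workflow_id, cache_dir="/var/cache"):
    """Build a cache file path that is unique per workflow.

    workflow_id may be None, since meta data is not always populated;
    in that case a shared "default" file is used.
    """
    suffix = workflow_id if workflow_id else "default"
    # Keep the ID filesystem-safe in case it contains unexpected characters
    suffix = re.sub(r"[^A-Za-z0-9_.-]", "_", str(suffix))
    return os.path.join(cache_dir, "%s_%s.json" % (base_name, suffix))


# In a plugin (assumed attribute path):
# workflow_id = getattr(self.connection.meta.workflow, "workflowId", None)
print(workflow_cache_path("seen_events", "3f2a-77b1"))  # /var/cache/seen_events_3f2a-77b1.json
print(workflow_cache_path("seen_events", None))         # /var/cache/seen_events_default.json
```

Replacing unexpected characters keeps a malformed ID, such as one containing `/`, from producing an invalid or out-of-directory file name.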

Helper Library

The SDK provides some simple built-in utility functions, described below. You can use them in any of the hooks for running or testing actions and triggers in the generated code.

To make use of the helpers, import the insightconnect_plugin_runtime namespace.

You can use Python's built-in `dir()` to find out more about a specific function:

```python
dir(insightconnect_plugin_runtime.helper.clean_dict)
```

You can also use Python's built-in `help()` to display information in the plugin output. This is for testing only: `help()` writes to stdout, which results in an error in the plugin, so avoid leaving these calls in the final plugin. Rely on the official documentation where possible.

```python
help(insightconnect_plugin_runtime.helper.clean_dict)
```

clean_dict(dict)

Takes a dictionary as an argument and returns a new, cleaned dictionary without the keys containing None types and empty strings.

```python
>>> a = { 'a': 'stuff', 'b': 1, 'c': None, 'd': 'more', 'e': '' }
>>> # Keys c and e are removed
>>> insightconnect_plugin_runtime.helper.clean_dict(a)
{'a': 'stuff', 'b': 1, 'd': 'more'}
```

clean_list(list)

Takes a list as an argument and returns a new, cleaned list without None types and empty strings.

```python
>>> lst = [ 'stuff', 1, None, 'more', '', None, '' ]
>>> insightconnect_plugin_runtime.helper.clean_list(lst)
['stuff', 1, 'more']
```

clean(list|dict)

Takes a list or dict as an argument, recursively cleans it by removing all None and empty-string values, and returns a new data structure.
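To make the described behavior concrete, here is an illustrative pure-Python re-implementation of the rules stated above. It is a sketch, not the SDK's code, and the SDK may differ in edge cases:

```python
def clean(obj):
    """Recursively drop None values and empty strings from dicts and lists."""
    if isinstance(obj, dict):
        return {k: clean(v) for k, v in obj.items() if v is not None and v != ""}
    if isinstance(obj, list):
        return [clean(v) for v in obj if v is not None and v != ""]
    return obj


person = {"id": 1, "name": "Sallee", "email": None, "tags": ["a", None, ""], "note": ""}
print(clean(person))  # {'id': 1, 'name': 'Sallee', 'tags': ['a']}
```

This sketch tests for None and '' explicitly instead of relying on truthiness. As a result, values such as 0, False, and empty containers are kept.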

```python
>>> person = { "id" : 1, "first_name": "Sallee", "last_name": None, "email": "sfidler0@taobao.com", "gender": None }
>>> insightconnect_plugin_runtime.helper.clean(person)
{'id': 1, 'first_name': 'Sallee', 'email': 'sfidler0@taobao.com'}
>>> animals = [ "dog", None, "bird", "fish", None ]
>>> insightconnect_plugin_runtime.helper.clean(animals)
['dog', 'bird', 'fish']
```

open_file(path)

Takes a file path as a string, opens it, and returns a file object on success or None on failure.

```python
>>> f = insightconnect_plugin_runtime.helper.open_file('/tmp/testfile')
>>> f.read()
b'test\n'
```

check_cachefile(path)

Takes a file path as a string and returns whether the cache file exists.

```python
>>> insightconnect_plugin_runtime.helper.check_cachefile('/var/cache/mycache')
True
>>> # This works too; the /var/cache prefix is not required
>>> insightconnect_plugin_runtime.helper.check_cachefile('mycache')
True
>>> insightconnect_plugin_runtime.helper.check_cachefile('nofile')
False
```

remove_cachefile(path)

Takes a file path as a string and removes the file, returning True on success.

```python
>>> os.listdir('/var/cache')
['test']
>>> insightconnect_plugin_runtime.helper.remove_cachefile('test')
True
>>> os.listdir('/var/cache')
[]
```

open_cachefile(file)

Takes a file path as a string and returns an open file object, creating the file if it does not exist.

```python
>>> f = insightconnect_plugin_runtime.helper.open_cachefile('/var/cache/test')
>>> f.read()
'stuff\n'
>>> os.listdir('/var/cache')
[]
>>> f = insightconnect_plugin_runtime.helper.open_cachefile('/var/cache/myplugin/cache.file')
>>> # The file has been created
>>> insightconnect_plugin_runtime.helper.check_cachefile('/var/cache/myplugin/cache.file')
True
```

lock_cache(file)

Takes a file path as a string and returns True on success.

```python
>>> f = insightconnect_plugin_runtime.helper.lock_cache('/var/cache/lock/lock1')
>>> f
True
```

unlock_cache(file, delay)

Takes a file path as a string and a delay length in seconds as an int or float

```python
>>> delay = 60
>>> f = insightconnect_plugin_runtime.helper.unlock_cache('/var/cache/lock/lock1', delay)
>>> # Sixty seconds later
>>> f
True
>>> file_name = '/var/cache/lock/lock1'
>>> f = insightconnect_plugin_runtime.helper.unlock_cache(file_name, 60)
>>> # Sixty seconds later
>>> f
True
```

get_hashes_string(str)

Returns a dictionary of common hashes from a string
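For comparison, here is a standard-library sketch that produces the same four digest types with `hashlib`. The function names are hypothetical, encoding the string as UTF-8 is an assumption, and the key order of the SDK's result may differ:

```python
import hashlib


def get_hashes(s):
    """Compute md5, sha1, sha256, and sha512 hex digests of a string."""
    data = s.encode("utf-8")
    return {name: hashlib.new(name, data).hexdigest()
            for name in ("md5", "sha1", "sha256", "sha512")}


def matches_any_hash(s, checksum):
    """True if checksum equals one of the digests of s (case-insensitive hex)."""
    return checksum.lower() in get_hashes(s).values()


print(get_hashes("abc")["md5"])  # 900150983cd24fb0d6963f7d28e17f72
```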

```python
>>> insightconnect_plugin_runtime.helper.get_hashes_string('thisisastring')
{'sha256': '572642d5581b8b466da59e87bf267ceb7b2afd880b59ed7573edff4d980eb1d5',
 'sha1': '93697ac6942965a0814ed2e4ded7251429e5c7a7',
 'sha512': '9145416eb9cc0c9ff3aecbe9a400f21ca2b99c927f63a9a245d22ac4fe6fe27036643e373708e3bdf7ace4f3b52573182ec6d1f38c7d25f9e06144617ad1cdc8',
 'md5': '0bba161a7165a211c7435c950ee78438'}
```

check_hashes(src, checksum)

Returns a boolean indicating whether checksum is a hash of the provided string.

```python
>>> insightconnect_plugin_runtime.helper.check_hashes('thisisastring', '0bba161a7165a211c7435c950ee78438')
True
>>> insightconnect_plugin_runtime.helper.check_hashes('thisisanotherstring', '0bba161a7165a211c7435c950ee78438')
False
```

extract_value()

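Takes four string arguments, as in the example below: a pattern preceding the key, the key, a pattern capturing the value, and the string to search.

```python
>>> string = '\n\tShell: /bin/bash\n\t'
>>> insightconnect_plugin_runtime.helper.extract_value(r'\s', 'Shell', r':\s(.*)\s', string)
'/bin/bash'
```

open_url(url)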

Takes a URL as a string and, optionally, a timeout as an int, verify as a boolean, and headers, which the examples below pass as keyword arguments.

A urllib response object is returned on success. None is returned if the response is 304 Not Modified.

```python
>>> urlobj = insightconnect_plugin_runtime.helper.open_url('http://google.com')
>>> urlobj.read()
'<!doctype html><html itemscope="" itemtype="http://schema.org/WebPage" lang="en"><head><meta content="Se...'
>>> urlobj = insightconnect_plugin_runtime.helper.open_url(url, Range='bytes=0-3', Authorization='aslfasdfasdfasdfasdf')
>>> urlobj.read()
Auth
>>> urlobj = insightconnect_plugin_runtime.helper.open_url(url, timeout=1, User_Agent='curl/0.7.9', If_None_Match=etag)
ERROR:root:HTTPError: 304 for http://24.151.224.211/ui/1.0.1.1038/dynamic/login.html
>>> type(urlobj)
<class 'NoneType'>
>>> urlobj = insightconnect_plugin_runtime.helper.open_url(url, User_Agent='curl/0.7.9', If_Modified_Since=mod)
ERROR:root:HTTPError: 304 for http://24.151.224.211/ui/1.0.1.1038/dynamic/login.html
```

get_url_filename(url)

Takes a URL as a string and returns the filename as a string, or None.

```python
>>> url = 'http://www.irongeek.com/robots.txt'
>>> insightconnect_plugin_runtime.helper.get_url_filename(url)
'robots.txt'
>>> insightconnect_plugin_runtime.helper.get_url_filename('http://203.66.168.223:83/')
'Create_By_AutoWeb.htm'
>>> if insightconnect_plugin_runtime.helper.get_url_filename('http://www.google.com') is None:
...     print('No file found')
...
No file found
```

check_url(url)

Takes a URL as a string and returns a boolean indicating whether the URL can be accessed successfully.

```python
>>> url = 'http://google.com'
>>> insightconnect_plugin_runtime.helper.check_url(url)
True
>>> url = 'http://invalid-url-123.com'
>>> insightconnect_plugin_runtime.helper.check_url(url)
False
```

check_url_modified(url)

Takes a URL as a string and returns a boolean indicating whether the URL has been modified.

```python
>>> url = 'http://google.com'
>>> insightconnect_plugin_runtime.helper.check_url_modified(url)
False
```

get_url_path_filename(url)

Takes a URL as a string and returns the filename from the URL path as a string if it has a file extension; otherwise, returns None.

```python
>>> insightconnect_plugin_runtime.helper.get_url_path_filename('http://www.irongeek.com/robots.txt')
'robots.txt'
```

encode_file(file_path)

Takes a file path as a string and returns the file's contents, base64 encoded.

```python
>>> insightconnect_plugin_runtime.helper.encode_file('./test_file')
b'VGhpcyBpcyBhIHRlc3QgZmlsZS4K'
```

encode_string(s)

Takes a string and returns it base64 encoded.

```python
>>> insightconnect_plugin_runtime.helper.encode_string('example_string')
b'ZXhhbXBsZV9zdHJpbmc='
```

exec_command(path_with_args)

Takes a command and its arguments as a string, and returns a dictionary with the return code (rcode), stderr, and stdout.

```python
>>> insightconnect_plugin_runtime.helper.exec_command('/bin/ls')
{'rcode': 0, 'stderr': '', 'stdout': 'GO.md\nPYTHON.md\nREADME.md\nSPEC.md\nball.pyc\nimgs\nold.py\nplugins.py\nplugins.pyc\nstatic.py\nstatic.pyc\n'}
```
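Here is a rough equivalent built on `subprocess`. It returns a dictionary shaped like the exec_command() output above, though the SDK helper's internals may differ. `run_command` is a hypothetical name. The sketch deliberately splits the string with `shlex.split` rather than running it through a shell:

```python
import shlex
import subprocess
import sys


def run_command(command):
    """Run a command string and return its return code and captured output."""
    # No shell is involved: the string is split into an argument list first
    proc = subprocess.run(shlex.split(command), capture_output=True, text=True)
    return {"rcode": proc.returncode, "stdout": proc.stdout, "stderr": proc.stderr}


result = run_command('%s -c "print(42)"' % shlex.quote(sys.executable))
print(result)  # {'rcode': 0, 'stdout': '42\n', 'stderr': ''}
```

Because no shell is involved, characters such as `;` or `|` in the string are passed as literal arguments instead of being interpreted.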